Building integrations with Logic Apps (or Power Automate) that use the File System action through the on-premises data gateway to reach your own server gets tricky when the files are larger than 20 MB. In practice, they often are a bit larger than that.

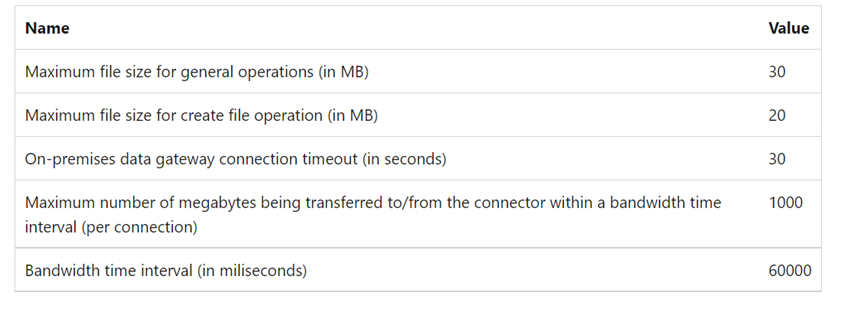

File System action limits:

So, how do you process files larger than 20 MB? I have tried several approaches, from zipping the files and using self-hosted Logic Apps (not consumption-based) to chunking, but none of them got around the file size limit.

The workaround I've settled on is Azure Blob Storage. Instead of trying to create the files on the server at all, I create them in a blob container. A PowerShell script, run as a recurring task in Windows Task Scheduler, then downloads the files from Blob Storage to a local folder on the server.

Here’s my solution with PowerShell:

1. Create a SAS token for your storage account and give it the permissions the script needs: list, read, write (to copy files into the processed folder) and delete. There are other ways to authenticate, but I'm using a SAS token here for simplicity's sake.
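If you would rather create the token from PowerShell than from the Azure portal, a sketch along these lines should work. It assumes you have already signed in with `Connect-AzAccount` and are allowed to read the storage account keys; the function name, the resource group parameter and the 30-day default expiry are hypothetical choices, not part of the setup described here.

```powershell
# Hypothetical helper: create a container-scoped SAS token for the download script.
# Assumes you are already signed in with Connect-AzAccount.
function New-BlobDownloadSasToken {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $StorageAccountName,
        [Parameter(Mandatory)] [string] $ContainerName,
        [int] $ValidDays = 30   # hypothetical default lifetime
    )
    # Build a key-based context, which is allowed to sign SAS tokens
    $key = (Get-AzStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $StorageAccountName)[0].Value
    $keyContext = New-AzStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $key

    # r = read (download, copy source), w = write (copy into the processed folder),
    # d = delete (remove the original), l = list (Get-AzStorageBlob)
    New-AzStorageContainerSASToken -Name $ContainerName -Permission "rwdl" `
        -ExpiryTime (Get-Date).AddDays($ValidDays) -Context $keyContext
}
```

For example: `$sasToken = New-BlobDownloadSasToken -ResourceGroupName "<resourcegroupnamehere>" -StorageAccountName "<integrationstorageaccountnamehere>" -ContainerName "<containernamehere>"`. Keep in mind that the token expires, and the scheduled script stops working until you replace it with a new one.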

2. Next, install the Az.Storage PowerShell module if you haven't done so already (remember to run PowerShell as administrator):

```powershell
Install-Module -Name Az.Storage -AllowClobber -Force
```

3. Once the module is installed, run the following script with your own values:

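```powershell
# Set variables
$storageAccountName = "<integrationstorageaccountnamehere>"
$containerName = "<containernamehere>"
$folderPath = "<BlobFolderNamehere>"
$localDirectory = "c:\data\"
$processedFolder = "BlobFolderNameProcessed"
$sasToken = "?sv=2021-10….."

# Create the storage context using the SAS token
$context = New-AzStorageContext -StorageAccountName $storageAccountName -SasToken $sasToken

# Get all files in the specified folder. The trailing "/" stops the prefix from also
# matching the processed folder, whose name starts with the same text.
$blobs = Get-AzStorageBlob -Container $containerName -Prefix "$folderPath/" -Context $context

foreach ($blob in $blobs) {
    $blobName = $blob.Name
    $destinationPath = Join-Path -Path $localDirectory -ChildPath $blobName
    $destinationBlobName = "$processedFolder/$blobName"

    try {
        # Blob names include the virtual folder, so make sure the local subfolder exists
        New-Item -ItemType Directory -Path ([System.IO.Path]::GetDirectoryName($destinationPath)) -Force | Out-Null

        # Download the file to the local directory
        Get-AzStorageBlobContent -Container $containerName -Blob $blobName -Destination $destinationPath -Context $context -Force -ErrorAction Stop

        # Copy the blob to the processed folder. The copy runs asynchronously,
        # so wait for it to finish before touching the original.
        Start-AzStorageBlobCopy -SrcContainer $containerName -SrcBlob $blobName -DestContainer $containerName -DestBlob $destinationBlobName -Context $context -Force -ErrorAction Stop
        $copyState = Get-AzStorageBlobCopyState -Container $containerName -Blob $destinationBlobName -Context $context -WaitForComplete -ErrorAction Stop
        if ($copyState.Status -ne "Success") {
            throw "Copy finished with status '$($copyState.Status)'."
        }

        # Delete the original file only after it has been downloaded and copied
        Remove-AzStorageBlob -Container $containerName -Blob $blobName -Context $context -ErrorAction Stop
        Write-Output "File '$blobName' processed and transferred to '$processedFolder' folder."
    }
    catch {
        # Keep the original blob so the next scheduled run can retry it
        Write-Output "Failed to transfer file '$blobName' to '$processedFolder' folder: $_"
    }
}

Write-Output "File processing complete."
```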
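Before scheduling anything, it is worth testing with a file that really exceeds the 20 MB limit. The sketch below generates a zero-filled dummy file and uploads it to the folder the script reads from. The function names and the file name `large-test-file.bin` are hypothetical, and the SAS token must allow writing blobs for the upload to succeed.

```powershell
# Hypothetical helper: create a zero-filled file of the given size.
function New-LargeTestFile {
    param(
        [Parameter(Mandatory)] [string] $Path,
        [int] $SizeMB = 25   # above the 20 MB File System action limit
    )
    $stream = [System.IO.File]::Create($Path)
    try {
        # Extending the stream pads the file with zero bytes
        $stream.SetLength([int64]$SizeMB * 1MB)
    }
    finally {
        $stream.Dispose()
    }
    Get-Item -LiteralPath $Path
}

# Hypothetical helper: upload the test file into the folder the download script reads from.
function Send-LargeTestBlob {
    param(
        [Parameter(Mandatory)] [string] $StorageAccountName,
        [Parameter(Mandatory)] [string] $ContainerName,
        [Parameter(Mandatory)] [string] $FolderPath,
        [Parameter(Mandatory)] [string] $SasToken,
        [int] $SizeMB = 25
    )
    $tempFile = Join-Path ([System.IO.Path]::GetTempPath()) "large-test-file.bin"
    $file = New-LargeTestFile -Path $tempFile -SizeMB $SizeMB
    $context = New-AzStorageContext -StorageAccountName $StorageAccountName -SasToken $SasToken
    Set-AzStorageBlobContent -File $file.FullName -Container $ContainerName `
        -Blob "$FolderPath/large-test-file.bin" -Context $context -Force
}
```

For example, run `Send-LargeTestBlob -StorageAccountName $storageAccountName -ContainerName $containerName -FolderPath $folderPath -SasToken $sasToken`, then run the download script and check that `large-test-file.bin` appears under `c:\data\`.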

4. Test that it works, then save it as a .ps1 script file and schedule it with Task Scheduler.
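The task can also be registered from PowerShell with the ScheduledTasks cmdlets built into Windows. Here is a minimal sketch; the function name, task name, script path and 15-minute interval are hypothetical placeholders.

```powershell
# Hypothetical helper: run the saved download script on a fixed interval.
function Register-BlobDownloadTask {
    param(
        [Parameter(Mandatory)] [string] $ScriptPath,   # e.g. C:\scripts\Download-Blobs.ps1 (hypothetical)
        [string] $TaskName = "BlobDownload",          # hypothetical task name
        [int] $IntervalMinutes = 15                   # hypothetical interval
    )
    # Run Windows PowerShell non-interactively against the saved script
    $action = New-ScheduledTaskAction -Execute "powershell.exe" `
        -Argument "-NoProfile -ExecutionPolicy Bypass -File `"$ScriptPath`""

    # Start now and repeat every $IntervalMinutes minutes
    $trigger = New-ScheduledTaskTrigger -Once -At (Get-Date) `
        -RepetitionInterval (New-TimeSpan -Minutes $IntervalMinutes)

    # If a run is still going when the next one is due, skip the new one
    $settings = New-ScheduledTaskSettingsSet -MultipleInstances IgnoreNew

    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger -Settings $settings
}
```

For example: `Register-BlobDownloadTask -ScriptPath "C:\scripts\Download-Blobs.ps1"`. Registered this way, without `-User`/`-Password` or a `-Principal`, the task runs under the current account and typically only while that user is logged on. On a server, you will probably want to register it under a dedicated service account instead.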

If you have any other ideas on how to tackle this, feel free to comment. Thanks!